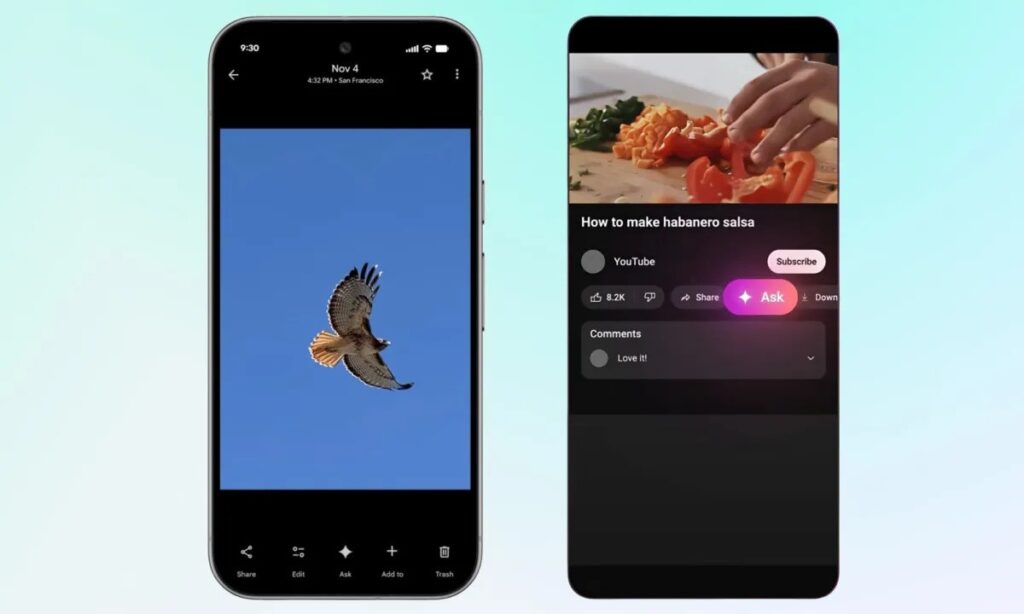

Google is quietly reshaping how people interact with their content. The company has started rolling out its new Ask feature inside Google Photos and YouTube, giving users a way to search, summarize and understand their photos and videos through natural conversation rather than traditional keyword queries.

The experience is powered by Google’s Gemini AI model, which blends visual understanding with text processing to deliver answers in real time. It’s part of Google’s ongoing push to make AI feel native inside the apps people already rely on, a direction also reflected in Google’s latest Gemini updates.

Inside Google Photos, Ask acts as a conversational layer on top of your library. Instead of endlessly scrolling or trying to remember specific dates, you can simply say “Show me photos from my Paris trip” or “Find pictures of my dog at the beach” and get instant results without typing specific search terms. Ask can also help with editing tasks. You can describe adjustments like “Make the sky brighter” or “Fix the lighting here” and Photos applies those changes immediately. Google has been expanding AI editing for a while, including its Magic Editor tools.

A similar Ask button is surfacing on YouTube for select users on desktop and mobile. It’s built to work as the video plays, which means you don’t have to pause or scrub through the timeline to find information. You can request a summary, ask what the video is about, get explanations of concepts or even extract recipe ingredients. For example, you can ask “List all the ingredients shown so far” and Gemini will generate a clean answer using contextual understanding from the video itself. YouTube has been testing several AI features recently, including conversational Q&A tools, highlighting Google’s focus on interactive video search.

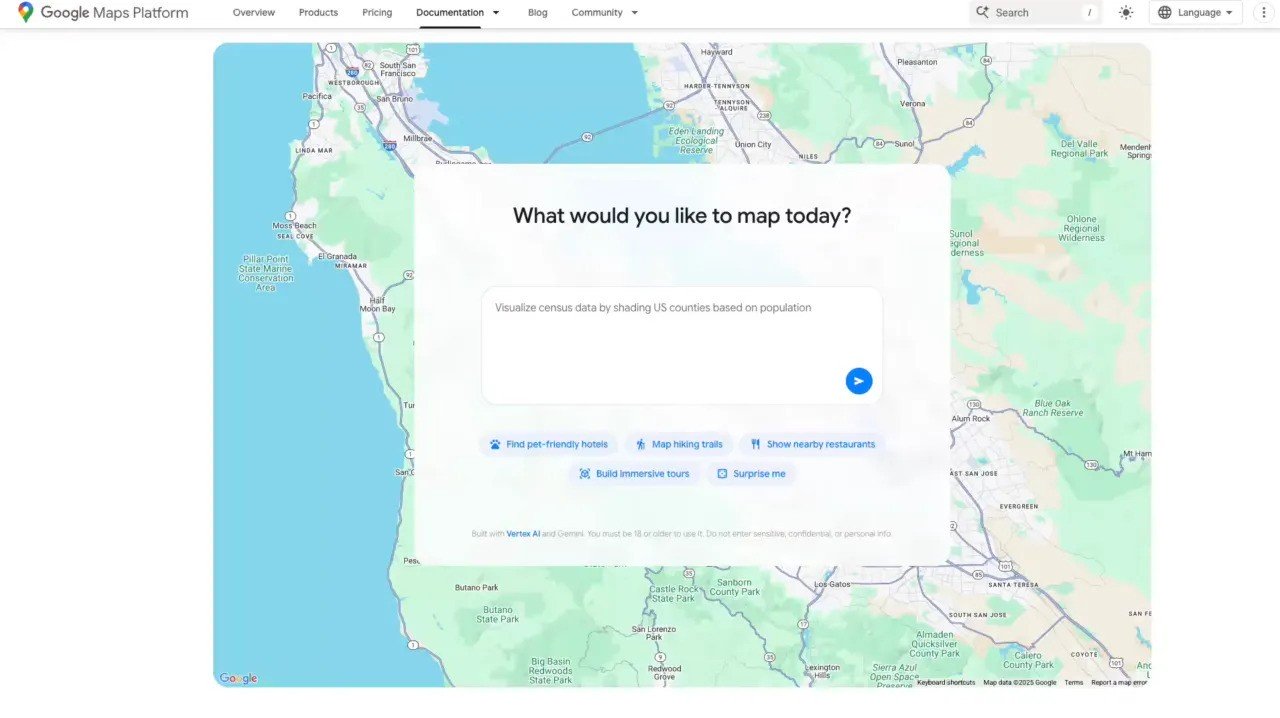

This marks a significant shift in how people navigate visual content. Traditional search works best with structured information, but everyday photos and long videos don’t always fit that model. Ask changes that dynamic by letting users speak naturally, tapping into Gemini’s multimodal reasoning to interpret visuals and text together.

Google also says these conversations are not used for advertising and that responses are generated on the spot, addressing concerns around how personal data and private images might interact with AI models.

Ask Photos is currently available to adults in the United States and will expand to more than one hundred countries and seventeen new languages in the coming week. The YouTube version is rolling out gradually on select English-language videos across Android, iOS and desktop. Users can find the Ask button below the video player, positioned between the Share and Download options.

For Google, the deeper goal is to make AI feel seamless inside platforms people already use daily. Photos, with more than a billion users worldwide, and YouTube, with billions of daily views, are ideal places to introduce that next step in AI-guided search. It’s a move that brings Google closer to truly AI-native apps, where people don’t just search or scroll but start real conversations with their content.