One of the biggest tech stories this year arrived in a surprisingly short announcement. Apple confirmed that Google’s Gemini will help power the next generation of Siri and Apple Intelligence, marking a major shift in how AI features will work across iPhone, iPad, and Mac. It is a move that quietly says a lot about where Apple stands in the AI race and what users can realistically expect next.

In a joint statement, Apple and Google said that Gemini will play a central role in rebuilding Siri and shaping future AI-driven software experiences. According to Apple, Gemini models will support upcoming Apple Intelligence features, including a far more personal and capable version of Siri rolling out later this year. For everyday users, this could finally mean an assistant that understands context, handles complex requests, and actually gets things done.

This partnership is also a big win for Google. Apple reportedly tested alternatives such as OpenAI’s GPT models and Anthropic’s Claude before settling on Gemini. Choosing Google’s AI platform signals strong confidence in Gemini’s real-world performance and scalability. It is also an implicit admission that Apple could not keep pace with Google, Meta, and OpenAI by building large language models on its own.

If you have followed Siri’s uneven progress over the years, this shift has felt inevitable. Apple has struggled to turn Siri into a truly intelligent assistant, while rivals pushed ahead with more conversational and capable AI systems. Now, with Gemini in the mix, Apple finally has access to a mature AI backbone to build on.

Privacy still matters, maybe more than ever

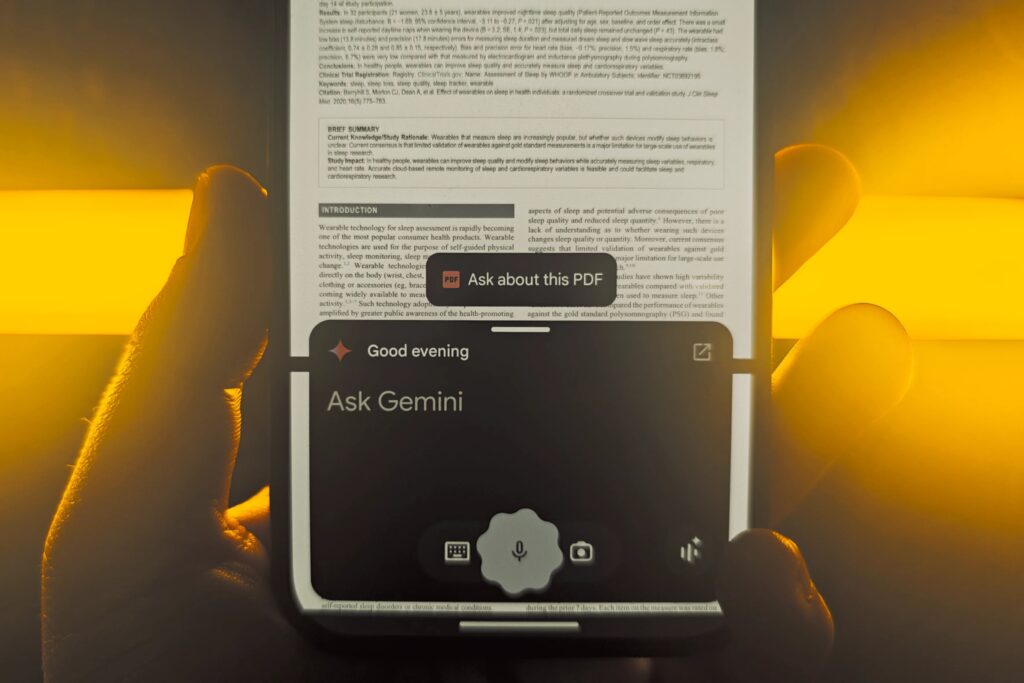

Whenever AI gets deeper access to our lives, privacy becomes the first concern. Modern assistants can see emails, calendars, photos, messages, files, and personal routines. Researchers have already raised alarms about emotional dependency on AI tools, as discussed in a Digital Trends analysis of human-AI relationships. Add cloud processing into the mix, and the risks feel even higher.

Apple’s answer is a hybrid approach. Some AI tasks will run directly on the device, much as Google’s Gemini Nano does on Android phones. This keeps data entirely on your phone, but local hardware limits how large and capable those models can be. More demanding requests still require cloud-based processing, and that is where Apple’s Private Cloud Compute comes in.

Apple says that Apple Intelligence will run on its own devices and private cloud servers, not Google’s infrastructure. These servers use custom Apple silicon and a security-focused operating system. Data is encrypted the moment it leaves your device and deleted as soon as the task is completed. Apple claims that no user data is stored or accessible, even to Apple itself.

In this setup, Gemini provides the intelligence, but Apple controls everything else. Your requests are processed on Apple’s servers, not Google’s, which helps maintain Apple’s long-standing privacy stance while still benefiting from Gemini’s capabilities.

What changes for Siri and Apple Intelligence

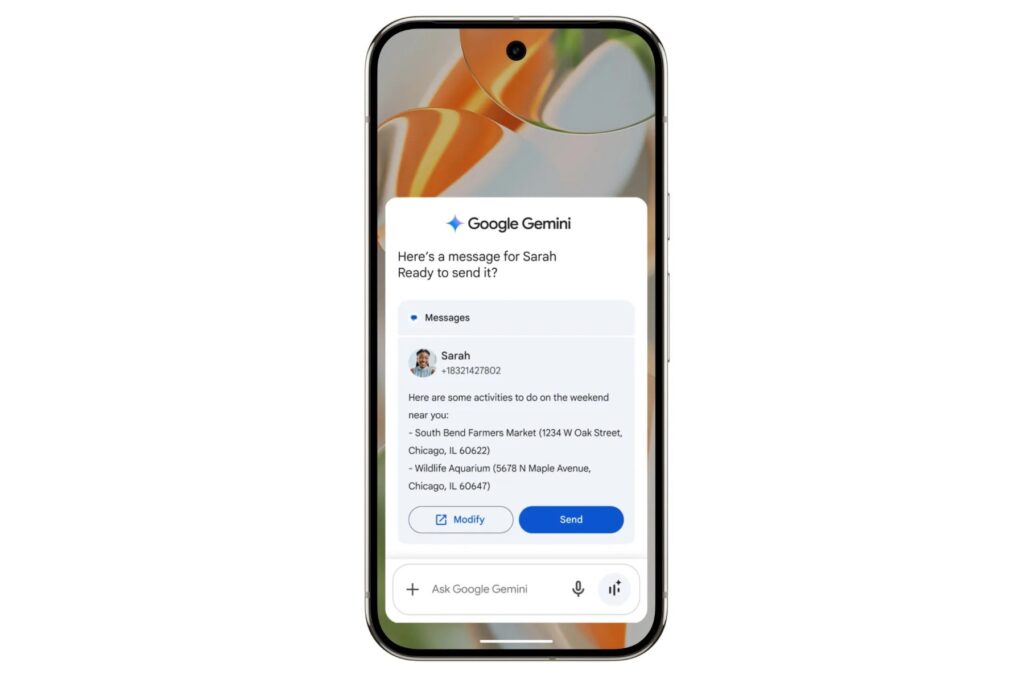

If you have ever compared Gemini on Android to Siri on iPhone, the difference is obvious. Gemini can summarize emails, search documents, send messages, and interact across apps with ease. Siri, on the other hand, often hands off anything complicated to ChatGPT or simply fails.

That gap is now closing. Gemini will enhance both Siri and Apple Intelligence, but Apple is not planning to slap Google branding across its software. The intelligence may come from Gemini, but the design, behavior, and experience will remain distinctly Apple.

This partnership goes deeper than voice commands. Apple plans to build the next generation of Apple Foundation Models on Google’s Gemini models. These models power features like writing assistance, image generation, summarization, and cross-app actions. Apple’s current foundation models were introduced in 2024 and improved a year later, and they can run either on-device or in Apple’s private cloud.

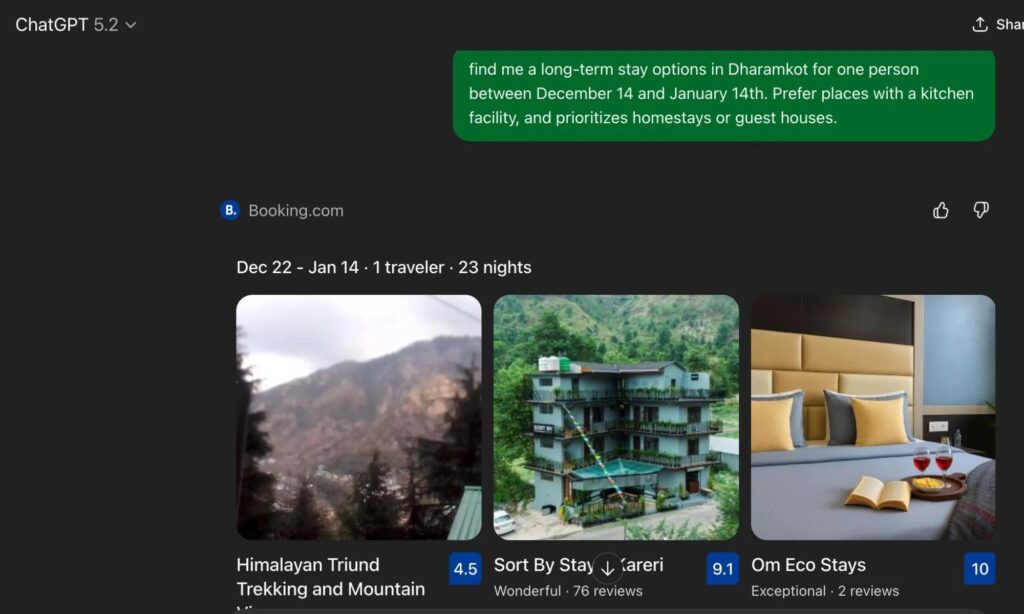

The real promise lies in how developers can use these models. Imagine opening Spotify and asking Siri to create a playlist based on your most played songs this month, without touching the app. Today, that kind of request is still hit-or-miss on iPhone. With Gemini-backed models, it becomes far more realistic.

Why this matters beyond Siri

One of Siri’s biggest weaknesses has been its limited understanding. Complex questions often get routed to ChatGPT, creating a fragmented experience. On Android devices like the Google Pixel, Gemini handles these tasks directly and can take action inside other apps without breaking the flow.

For example, Gemini can send messages through WhatsApp, check calendar events, search email inboxes, and retrieve file contents with a single command. Google’s tight integration with Gmail, Calendar, Drive, and other services makes this feel effortless. Apple users have been watching from the sidelines.

Now, Apple has a chance to catch up. With Gemini powering its foundation models, Apple can finally deliver system-wide intelligence that works across Notes, Mail, Music, Photos, and third-party apps. Universal search could become more conversational. Tasks could be completed without manually opening apps. Context could finally carry over from one request to the next.

Apple already has App Intents, a framework that lets apps expose their actions to Siri and the rest of the system so those actions can be triggered from outside the app. Adoption has been slow, largely because the assistant on the other end was not smart enough to make good use of them. With Gemini-based models handling the reasoning, developers may feel more confident building conversational features into their apps.

Some users have already seen a glimpse of this future through the ChatGPT app on iPhone, which allows cross-app actions when app connectors are enabled. But that setup requires linking third-party services to OpenAI, which raises its own privacy concerns. A built-in, OS-level solution from Apple would be cleaner, safer, and far more seamless.

Apple does not need to copy Google’s approach outright. Instead, it can study how Gemini works across Android and the web and adapt those ideas in a more restrained and thoughtful way. Apple has historically excelled at refining features rather than rushing them out, and this could be another example.

With Gemini now under the hood, Apple finally has the intelligence it needs to rethink Siri and Apple Intelligence from the ground up. The company can integrate AI into its apps and services in a way that feels natural instead of intrusive, avoiding the cluttered experiences seen with some competitors.

For users, this partnership signals a meaningful shift. Siri may finally become an assistant that understands intent, context, and follow-up questions. Apple devices could start feeling genuinely smarter, not just more automated. And with Apple’s privacy-first architecture still in place, the benefits of advanced AI might arrive without the usual trade-offs.